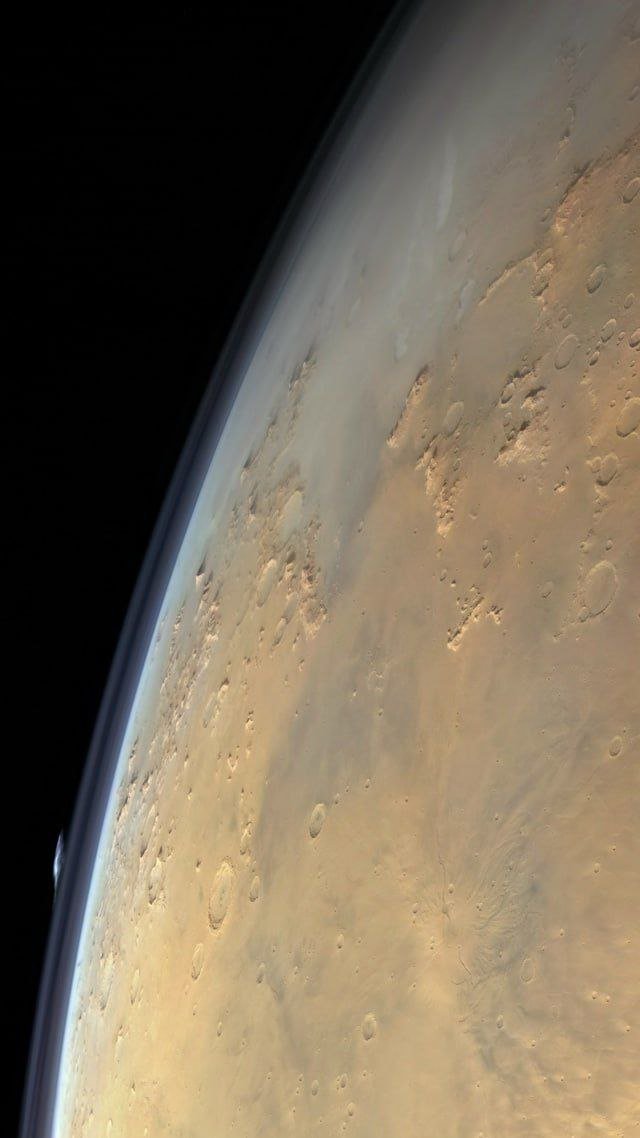

On July 17, 1969, a remarkable image of Earth was taken during the Apollo 11 mission to the Moon. The photograph, capturing our planet from space, has become an iconic symbol of human exploration and achievement.

The image serves as a powerful reminder of the mission that first landed astronauts on the Moon, and it shows the beauty and fragility of our planet from a rare vantage point.